News Story

Bee drones featured on new Voice of America video

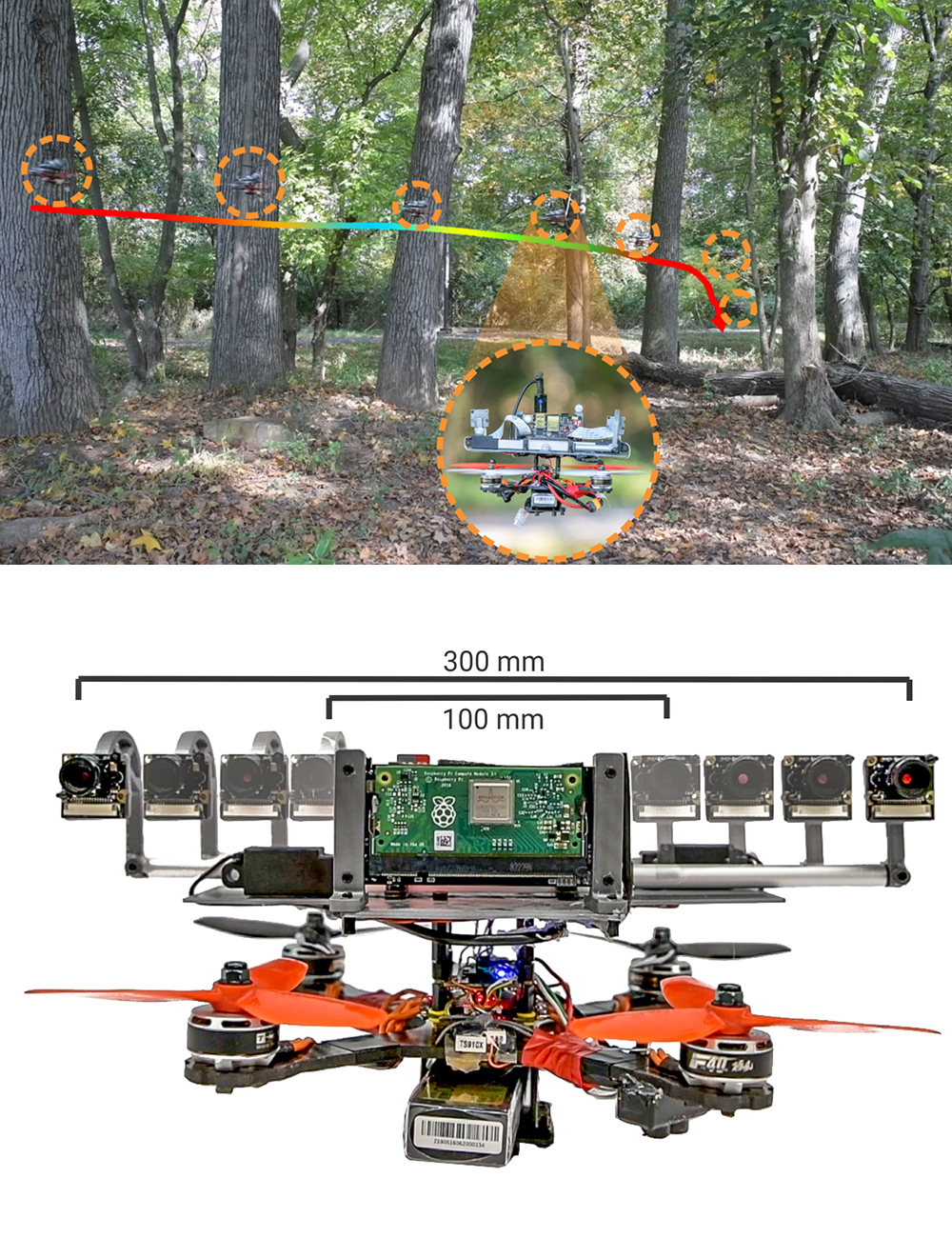

A new Voice of America (VOA) video features some of the latest active perception work of ISR-affiliated Professor Yiannis Aloimonos (CS/UMIACS) and the Perception and Robotics Group. It shows the autonomous "bee" drones recently developed by the group, which were also featured in a Maryland Today news story.

Integration is one of the most important challenges facing the robotics field. A robot's sensors and the actuators that move it are separate systems, linked by a central learning mechanism that infers the needed action from sensor data, or vice versa. This often-used three-part AI system, in which each part speaks its own language, is a cumbersome and slow way to get robots to accomplish sensorimotor tasks.

Integrating a robot's perception with its motor capabilities, an approach known as "active perception," offers a faster, more efficient way for robots to complete tasks.

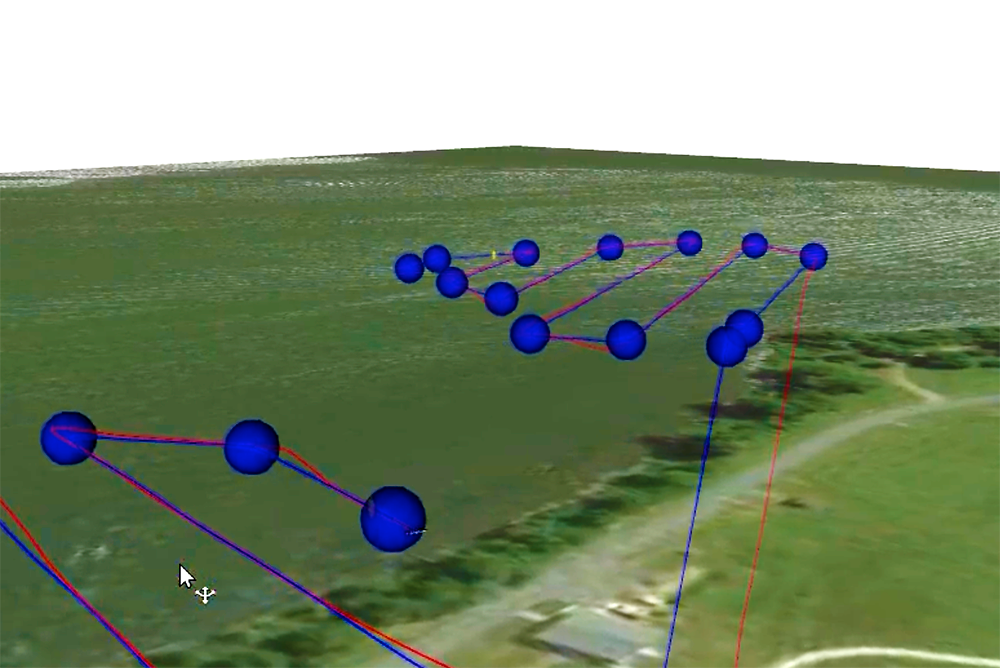

In active perception, instead of mapping the world with lidar or other systems that larger robots might use, the drones stay in constant motion to build a better understanding of their surroundings. This movement helps them collect data about the space around them and navigate autonomously.

The bee drones have two cameras for 360-degree vision and carry an onboard computer that can run different control software.

Also involved in the project are ISR-affiliated Associate Research Scientist Cornelia Fermüller (UMIACS) and Ph.D. candidate Nitin Sanket, who defended his related doctoral dissertation, "Active Vision Based Embodied-AI Design for Nano-UAV Autonomy," in July.

The video originally appeared on VOA's technology-oriented show "Log On."

—Thanks to Tom Ventsias and UMIACS for this story.

Published August 3, 2021