News Story

Microrobots soon could be seeing better, say UMD faculty in Science Robotics

ISR-affiliated Professor Yiannis Aloimonos (CS/UMIACS) and Associate Research Scientist Cornelia Fermüller (UMIACS) imagine the future of insect-scale, active vision sensing systems in a July 15, 2020 Science Robotics commentary.

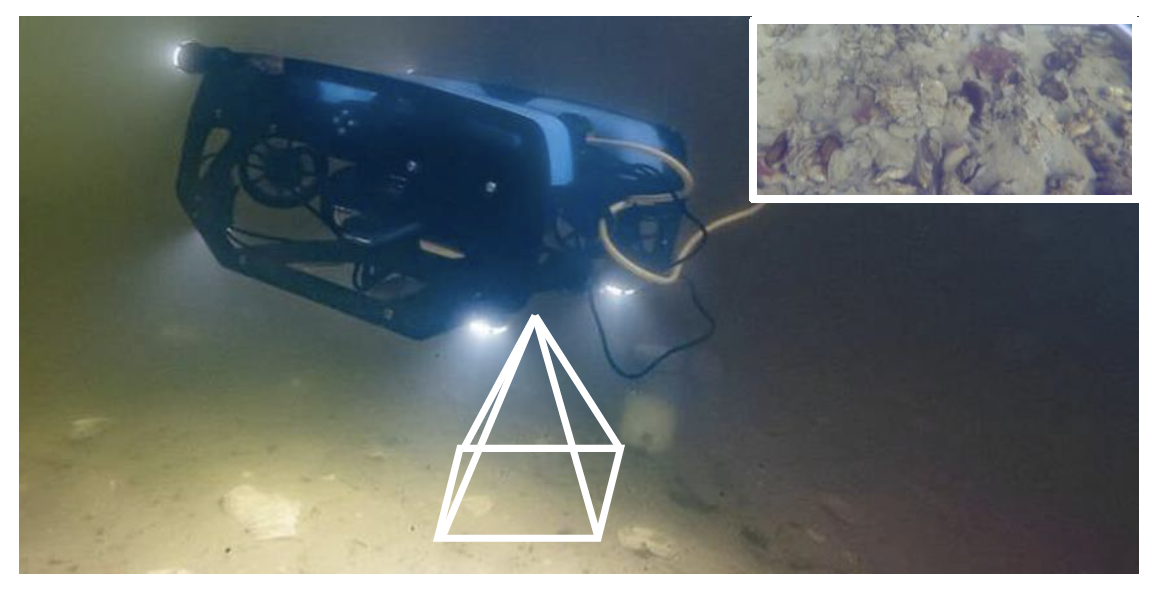

In “A bug’s eye view,” the well-known computer vision specialists consider the ramifications of research appearing in the same issue of the journal, “Wireless steerable vision for live insects and insect-scale robots,” by V. Iyer, A. Najafi, J. James, S. Fuller, and S. Gollakota. This research reports a wireless, Bluetooth-enabled, low-power, rotatable vision system that can be mounted on insect-scale robots and live insects. The research demonstrates that it is now feasible to equip insect-scale robots with an “active vision” system that allows scene selection, manipulation of the field of vision, and a capability to zoom the camera.

This system is superior to existing microrobot schemes that rely on fixed parameters, Aloimonos and Fermüller note.

Because of their size, weight, area, and power constraints, tiny robots currently cannot reconstruct scenes using simultaneous localization and mapping (SLAM) algorithms. Iyer and colleagues offer an alternative: a steerable camera system that can perform a specific set of tasks more effectively.

Aloimonos and Fermüller imagine a future of microrobots with such steerable cameras that could find holes, successfully fly through them, avoid obstacles, and recognize a variety of objects without computing 3D world models—an alternative to SLAM.

“If the insect robotics community succeeds with this SLAM alternative,” they write, “then the results will permeate all robotics.”

Published July 15, 2020