UMD’s SeaDroneSim can generate simulated images and videos to help UAV systems recognize ‘objects of interest’ in the water

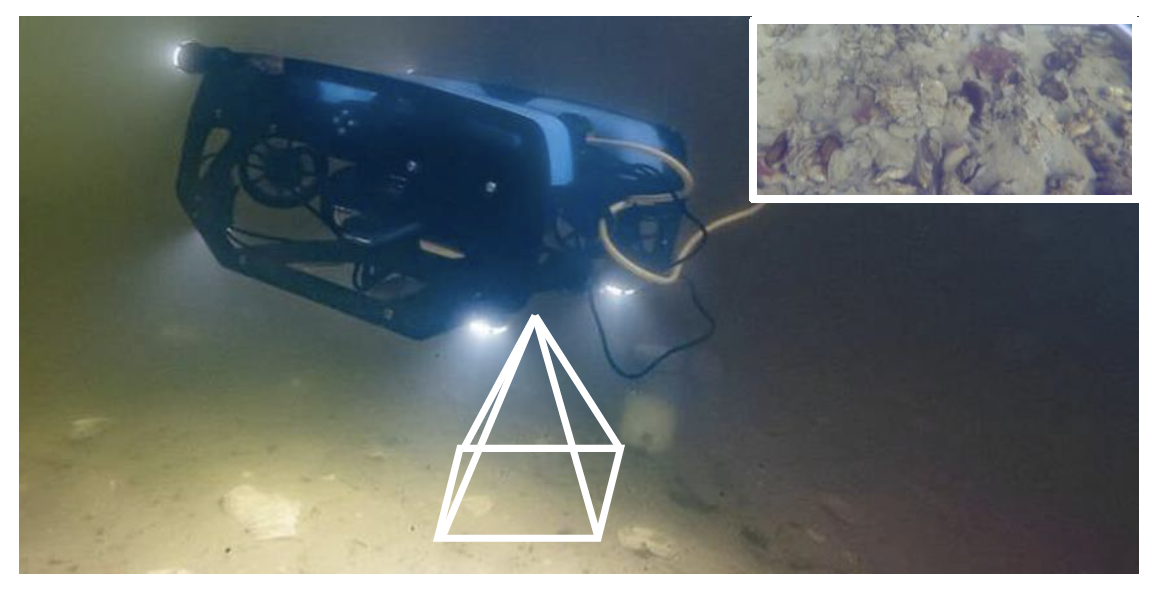

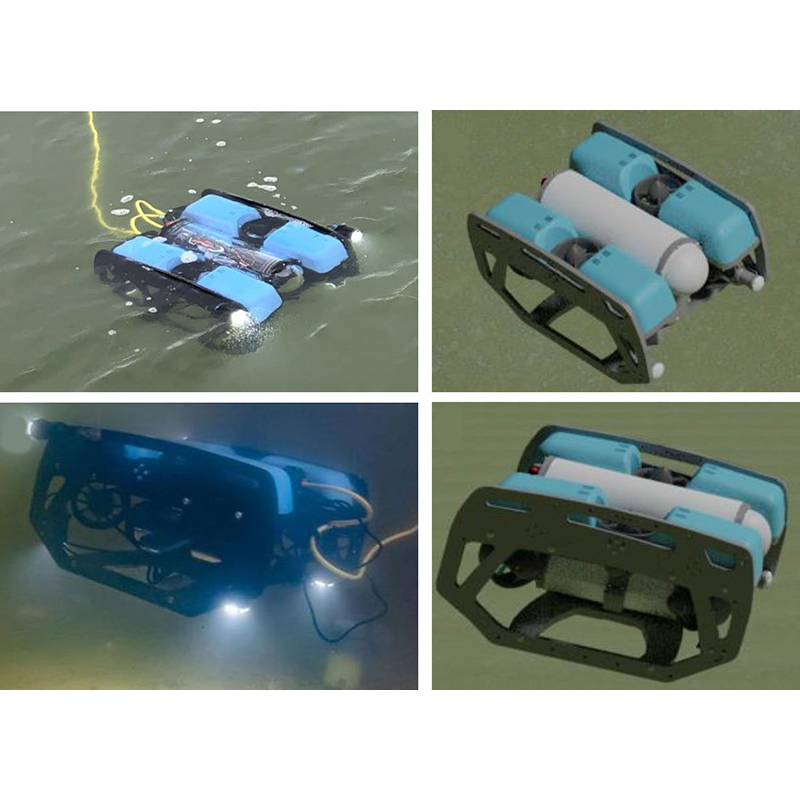

Top left: BlueROV in water. Top right: BlueROV simulated. Bottom left: Another view of BlueROV in water (note the different angle and rotation). Bottom right: The simulated BlueROV from this viewpoint. The 3D model in the simulation looks very similar to the real BlueROV. Because objects appear relatively small in UAV-based images, it is possible to render datasets in SeaDroneSim and use them directly to train a network to detect real objects. (Figure 3 from the paper.)

With their speed and versatility in collecting aerial images, unmanned aerial vehicles (UAVs) have become a vital supporting technology over both land and water. In recent years, their use has increased as UAVs have become more available, accessible and affordable. They can be fitted with technologies like high-resolution cameras and graphics processing units, which make them valuable for precision agriculture and aquaculture, land use surveys, search and rescue operations, and vehicle and watercraft monitoring.

To make sense of what UAV cameras record and transmit, UAV operators rely on modern computer vision algorithms that allow them to find “objects of interest” in complex and changing environments. Currently, the usefulness of these algorithms depends on machine learning training based on large image datasets collected by UAV cameras. In maritime environments, it is time-consuming and labor-intensive to collect this information, and only a few datasets exist.

“SeaDroneSim: Simulation of Aerial Images for Detection of Objects Above Water” is a new benchmark suite developed by researchers at the University of Maryland (UMD) that allows users to generate synthetic data and create photo-realistic aerial image datasets with ground-truth segmentation masks for any given object. Early testing suggests that SeaDroneSim’s synthetic data can be used in place of datasets based on UAV videos of real objects in the water.

A paper describing SeaDroneSim was presented at the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision Workshops (WACVW), held in January. It was written by ECE PhD student Xiaomin Lin; MEng in Robotics student Cheng Liu; Allen Pattillo, a shellfish aquaculture technology specialist at the University of Maryland Extension; Professor Miao Yu (ME/ISR); and ISR-affiliated Professor Yiannis Aloimonos (CS/UMIACS). Lin and Liu are advised by Aloimonos, and the three are part of the Perception and Robotics Group at the University of Maryland. All authors are part of the “Transforming Shellfish Farming with Smart Technology and Management Practices for Sustainable Production” project, funded for $10 million by the USDA National Institute of Food and Agriculture in 2020 for five years. The development of SeaDroneSim is a useful component of this ongoing oyster aquaculture research effort.

The challenge of identifying maritime objects

When a large ocean or bay area needs to be searched quickly, UAVs equipped with robust computer vision-based systems can provide vital aid. This is especially important in emergency search and rescue operations such as when watercraft have capsized with people aboard. The systems need to be able to locate and track “objects of interest” under many kinds of lighting, weather conditions, image altitudes, viewing angles and water conditions and colors.

Most systems are vision-based, data-driven, and employ deep neural networks. To correctly recognize objects under diverse environmental conditions, they depend on what they have learned from large datasets. However, only a few datasets are currently available, and these are limited both in size and in the variety of objects of interest they include. Manually collecting images for these types of datasets is slow and scales poorly. With a nearly infinite number of potential maritime objects of interest, there is a need to rapidly generate large-scale datasets that are more useful than those currently available from UAV-captured video.

About SeaDroneSim

The UMD researchers developed SeaDroneSim, a new suite for generating simulated maritime aerial images. SeaDroneSim was built using the 3D modeling and rendering software Blender™ to generate synthetic datasets for object detection. The suite can generate images and videos from a 3D model of any object of interest.
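One reason rendered data suits object detection is that the renderer knows exactly which pixels belong to the object, so labels can be derived instead of drawn by hand. The Python sketch below illustrates that idea by turning a binary segmentation mask into a bounding box. It is only an illustration: the function name `mask_to_bbox` and the nested-list mask format are assumptions made for this example and are not taken from SeaDroneSim.

```python
from typing import List, Optional, Tuple


def mask_to_bbox(mask: List[List[int]]) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) covering all nonzero pixels.

    Hypothetical helper for illustration; returns None for an empty mask.
    """
    # Row indices that contain at least one object pixel.
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    # Column indices of every object pixel across all rows.
    cols = [x for row in mask for x, value in enumerate(row) if value]
    return (min(cols), rows[0], max(cols), rows[-1])


# A tiny 4x5 mask standing in for a rendered segmentation mask.
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
bbox = mask_to_bbox(mask)
print(bbox)
```

In a real pipeline the mask would come from the renderer's output rather than a hand-written list, and the box would be written out in whatever annotation format the detector expects.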

To study whether objects could realistically be recognized solely from SeaDroneSim-generated synthetic images, the suite was tested using the remotely operated underwater vehicle “BlueROV” as an object of interest. The research team obtained a detailed 3D computer model of BlueROV from Patrick José Pereira of the BlueROV company and used it to simulate various image poses.

The SeaDroneSim simulation provided all the ground truth information for the aerial camera (altitude and rotation) and for the BlueROV (rotation), and allowed the researchers to output all of this metadata. In the future, the metadata could be used to develop multi-modal systems that would improve accuracy and speed.
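To make the idea of per-image metadata concrete, here is a minimal Python sketch of what one record per rendered image might look like. Everything in it is an assumption for illustration: the field names, the file naming, and the 5 to 30 meter altitude range are hypothetical and do not describe SeaDroneSim's actual output format.

```python
import json
import random
from dataclasses import asdict, dataclass


@dataclass
class RenderMetadata:
    """Hypothetical ground-truth record stored alongside one rendered image."""
    image_name: str
    camera_altitude_m: float
    camera_rotation_deg: float
    object_rotation_deg: float


def sample_metadata(index, rng, altitude_range=(5.0, 30.0)):
    """Draw one random camera/object configuration (ranges are assumed)."""
    low, high = altitude_range
    return RenderMetadata(
        image_name=f"render_{index:05d}.png",
        camera_altitude_m=round(rng.uniform(low, high), 2),
        camera_rotation_deg=round(rng.uniform(0.0, 360.0), 2),
        object_rotation_deg=round(rng.uniform(0.0, 360.0), 2),
    )


# A seeded generator keeps the sampled dataset reproducible.
rng = random.Random(0)
records = [sample_metadata(i, rng) for i in range(3)]
metadata_json = json.dumps([asdict(r) for r in records], indent=2)
print(metadata_json)
```

Keeping the sampled parameters next to each image is what would let a later system use altitude or rotation as an extra input alongside the pixels.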

The researchers then compared detection results across different synthetic datasets generated from the simulation. They found that for data-critical applications where collecting real images is challenging, it is possible to use the 3D model of the object to create photorealistic images for training a network that successfully detects objects in a specific domain. The research also established a baseline for detecting BlueROV in open water, a starting point for further research involving the suite. In the future, the researchers hope to take advantage of robotics and AI advances for gathering UAV-based maritime aerial images to automate object detection and tracking.

SeaDroneSim is among the first UAV-based maritime image simulations to focus on object detection. The researchers have open-sourced SeaDroneSim and its associated dataset to accelerate further research, and have proposed a pipeline for autonomously generating UAV-based maritime images of objects of interest and detecting those objects.

Published February 24, 2023