News Story

Bera, Manocha and Shim awarded a BBI Seed Grant to examine the role of age and gender in gait-based emotion recognition

MRC faculty members Bera, Manocha and Shim are among the recipients of UMD's FY20 Brain and Behavior Initiative (BBI) Seed Grants. The BBI's mission is to revolutionize the interface between engineers and neuroscientists by generating novel tools and approaches for understanding complex behaviors produced by the human brain. BBI-supported research brings the quantitative rigor and tools of STEM fields to complex, societally relevant investigations of brain and behavior.

The 2020 BBI Seed Grant projects are highly interdisciplinary and tackle a diverse set of problems and research questions, including Alzheimer's disease, disparities in hearing, healthcare access, the influence of complex traumas on Black men's mental health, the detection and classification of human emotion based on gait, neuroendocrine effects on vertebrate brain function, and the engineering of an organismal regulatory circuit.

More about the Bera, Shim and Manocha project:

Project title: Learning Age and Gender Adaptive Gait Motor Control-based Emotion Using Deep Neural Networks and Affective Modeling

- Aniket Bera (Assistant Research Professor, Computer Science; UMIACS; MRC; MTI)

- Jae Kun Shim (Professor, Kinesiology; Bioengineering; MRC)

- Dinesh Manocha (Paul Chrisman Iribe Professor of Computer Science; Professor, Electrical and Computer Engineering; UMIACS)

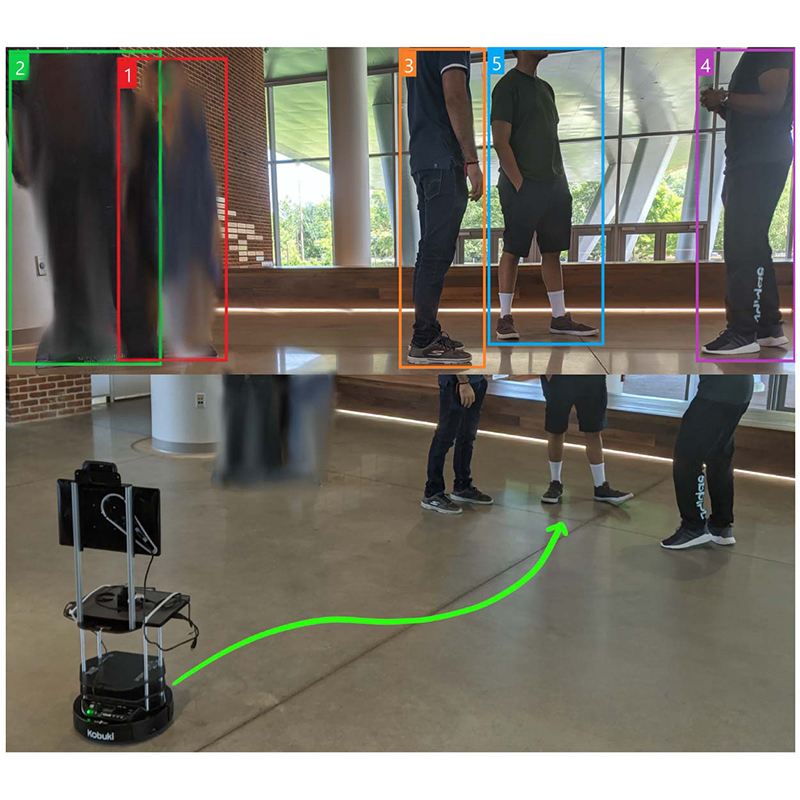

Detecting and classifying human emotion is one of the most challenging problems at the confluence of psychology, affective computing, kinesiology, and data science. Previous studies have shown that human observers can perceive another person's emotions simply by observing physical cues such as facial expressions, prosody, body gestures, and walking style. This project aims to develop an automated, artificial-intelligence-based technique for perceiving human emotions from kinematic and kinetic variables, that is, from both the contextual and the intrinsic qualities of human motion.

The proposed research will use deep learning to examine the role of age and gender in gait-based emotion recognition. After collecting full-body gaits from people of different ages and genders in a motion-capture lab, Bera, Shim, and Manocha will employ an autoencoder-based, semi-supervised deep learning algorithm to learn perceived human emotions from walking style. They will then hierarchically pool the motions of individual joints in a bottom-up manner, following the kinematic chains of the human body, and couple these data with both perceived emotion (as judged by an external observer) and self-reported emotion.
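To make "bottom-up pooling along kinematic chains" more concrete, here is a minimal sketch under stated assumptions. It is not the team's algorithm. The skeleton (`PARENT`), the per-joint feature vectors, and the choice of mean pooling are all hypothetical illustrations: each joint's pooled vector is the element-wise mean of its own features and the pooled vectors of its child joints, computed from the extremities (ankles, head) inward to the root (pelvis).

```python
from statistics import fmean

# Hypothetical simplified skeleton: each joint maps to its parent joint.
# The root (pelvis) has no parent. Real motion-capture skeletons have more joints.
PARENT = {
    "pelvis": None,
    "spine": "pelvis",
    "head": "spine",
    "l_hip": "pelvis", "l_knee": "l_hip", "l_ankle": "l_knee",
    "r_hip": "pelvis", "r_knee": "r_hip", "r_ankle": "r_knee",
}


def children_of(parent_map):
    """Invert the parent map into a joint -> list-of-children map."""
    kids = {joint: [] for joint in parent_map}
    for joint, parent in parent_map.items():
        if parent is not None:
            kids[parent].append(joint)
    return kids


def pool_bottom_up(features, parent_map):
    """Pool per-joint feature vectors from the leaves toward the root.

    Assumption (illustrative only): a joint's pooled vector is the
    element-wise mean of its own feature vector and the pooled vectors
    of its children, so information flows up each kinematic chain.
    """
    kids = children_of(parent_map)
    pooled = {}

    def visit(joint):
        # Pool the children first (bottom-up), then combine with this joint.
        child_vecs = [visit(child) for child in kids[joint]]
        vecs = [features[joint]] + child_vecs
        pooled[joint] = [fmean(dim) for dim in zip(*vecs)]
        return pooled[joint]

    root = next(j for j, p in parent_map.items() if p is None)
    visit(root)
    return pooled


if __name__ == "__main__":
    # Hypothetical 2-D features per joint; only the left ankle is non-zero.
    feats = {joint: [0.0, 0.0] for joint in PARENT}
    feats["l_ankle"] = [3.0, 6.0]
    result = pool_bottom_up(feats, PARENT)
    print(result["l_knee"], result["l_hip"], result["pelvis"])
```

With the sample input, the left ankle's signal is halved at each step up the left leg and then averaged with the spine and right-leg branches at the pelvis. In a learned model, the fixed mean would typically be replaced by trainable layers, but the traversal order along the kinematic tree stays the same.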

Story courtesy of Nathaniel Underland, PhD, Brain and Behavior Initiative, UMD

Published August 14, 2020